I am doing some volunteer work this week, helping a non-profit process data that it receives on a regular basis. The process is relatively simple but time-consuming, and it involves three steps.

Step 1: the medical data is recorded and packaged into a zip file, which is uploaded to a Dropbox folder so that a doctor can interpret the data. Unfortunately, the software package the doctor uses cannot read zip files and needs the archive unpacked. It would help to automatically unzip each file dropped into an /Incoming folder and place the contents in a folder assigned to the doctor. Unzipping on macOS or Windows causes problems for the interpretation software, so moving this step to an Ubuntu Docker instance will hopefully solve the problem.
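As a local illustration of the Step 1 behavior, here is a minimal sketch. It assumes the Incoming and doctor folders are ordinary directories, for example a synced Dropbox folder on disk. Each archive is extracted into a subfolder named after the zip, and any archive that already has a subfolder is skipped so the script can be rerun safely. The post doesn't say what the macOS/Windows unzip issues are. Skipping the `__MACOSX/` and `._*` metadata entries that the macOS Finder adds to archives is an assumption about one likely culprit. `unzip_new_archives` is a hypothetical helper name.

```python
import zipfile
from pathlib import Path


def unzip_new_archives(incoming: Path, destination: Path) -> list[Path]:
    """Extract each .zip in `incoming` into destination/<zip name>, once."""
    extracted = []
    for archive in sorted(incoming.glob("*.zip")):
        target = destination / archive.stem
        if target.exists():
            # Already unpacked on an earlier pass; leave it alone.
            continue
        with zipfile.ZipFile(archive) as zf:
            # Assumption: drop macOS Finder metadata (__MACOSX/ folder, ._ files).
            members = [
                name for name in zf.namelist()
                if not name.startswith("__MACOSX/")
                and not Path(name).name.startswith("._")
            ]
            zf.extractall(target, members=members)
        extracted.append(target)
    return extracted
```

Calling `unzip_new_archives(Path("Incoming"), Path("Doctor"))` on a schedule would approximate the "watch the folder" behavior without needing a file-system event listener.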

Step 2: a doctor interprets the data, and the results are written into a couple of folders. Once the data is properly sorted, each report needs to be stamped with a watermark. If follow-up tests are requested, the necessary additional documents must be attached as subsequent pages. The files are PDFs, and overlaying a watermark or stamp on them by hand is a tedious, time-consuming process, so this step needs to be automated.
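Here is a minimal sketch of the stamping step. It assumes the third-party pypdf library (`pip install pypdf`, which is not installed by the Dockerfile below) and a stamp or watermark saved as a one-page PDF. The report pages are copied into a new document, `merge_page` draws the stamp over each one, and any follow-up documents are appended as extra pages. The function name and arguments are hypothetical. Deciding which reports get which stamp and attachments is left to the caller.

```python
def stamp_and_attach(source, stamp, output, attachments=()):
    """Overlay page 1 of `stamp` on every page of `source`, append the pages
    of each attachment PDF, write the result to `output`, return its page count."""
    # Imported here so the script still loads where pypdf isn't installed.
    from pypdf import PdfReader, PdfWriter

    stamp_page = PdfReader(stamp).pages[0]
    writer = PdfWriter()
    for page in PdfReader(source).pages:
        writer.add_page(page)
    for page in writer.pages:
        page.merge_page(stamp_page)  # stamp is drawn on top of the report content
    for extra in attachments:
        for page in PdfReader(extra).pages:
            writer.add_page(page)  # follow-up documents go after the report
    with open(output, "wb") as fh:
        writer.write(fh)
    return len(writer.pages)
```

For example, `stamp_and_attach("report.pdf", "stamp.pdf", "report-stamped.pdf", ["followup.pdf"])` uses hypothetical file names.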

Step 3 is returning the results to the patient or facility that recorded the data. This is typically done with a secure link and secure email communication. The message is customized based on the results of the test or tests, so it can't really be automated. Sharing the test results, however, can be.
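The link-sharing half of Step 3 could be sketched with the same Dropbox Python SDK used later in this post. This assumes `dbx` is a `dropbox.Dropbox` connection and that the caller supplies the Dropbox paths of the result files. If a direct link to a file already exists, it is reused, because asking the API to create a second link for the same file raises an error. Otherwise a new link is created. `share_results` is a hypothetical helper name.

```python
def share_results(dbx, paths):
    """Return {dropbox_path: shared_link_url}, reusing a link if one exists."""
    links = {}
    for path in paths:
        # direct_only=True: only links to this exact file, not to a parent folder
        existing = dbx.sharing_list_shared_links(path=path, direct_only=True).links
        if existing:
            links[path] = existing[0].url
        else:
            links[path] = dbx.sharing_create_shared_link_with_settings(path).url
    return links
```

Restrictions such as an expiry date or a password can be requested through the `settings=` argument (`dropbox.sharing.SharedLinkSettings`), subject to what the Dropbox plan allows. The personalized email that carries the link stays a manual step.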

To solve this problem, we package everything into a Docker image and copy a custom Python script (or JavaScript) into it. This gives us control over both distribution and the code itself.

To build a Docker image, we just need to create a Dockerfile that starts from the latest Ubuntu image and installs the curl, unzip, python3, and python3-pip packages on top of it. Once the base image is created, we can copy a Python file into it and run that script to download the zip file, unzip its contents into a folder, and upload the folder back to Dropbox. For the Dockerfile we can use:

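```dockerfile
# Create latest ubuntu instance
FROM ubuntu:latest

#
# labels to document the image
#
LABEL maintainer="pat@patshuff.com"
LABEL version="0.1"
LABEL description="test of unzip file in Dropbox"

#
# update and install unzip, python3, and the Dropbox Python SDK
#
# ARG (rather than RUN) makes the setting visible to every apt-get call in the
# build, so tzdata and software-properties-common install without prompting
ARG DEBIAN_FRONTEND=noninteractive

RUN apt-get update && \
    apt-get install -y tzdata && \
    apt-get install -y curl unzip gnupg software-properties-common \
        openjdk-8-jre-headless python3 python3-pip python-is-python3 vim && \
    rm -rf /var/lib/apt/lists/*

# python-is-python3 provides /usr/bin/python. Ubuntu 23.04 and later mark the
# system Python as externally managed (PEP 668), so pip needs this flag here
RUN pip3 install --break-system-packages dropbox

COPY test.py /tmp/test.py
```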

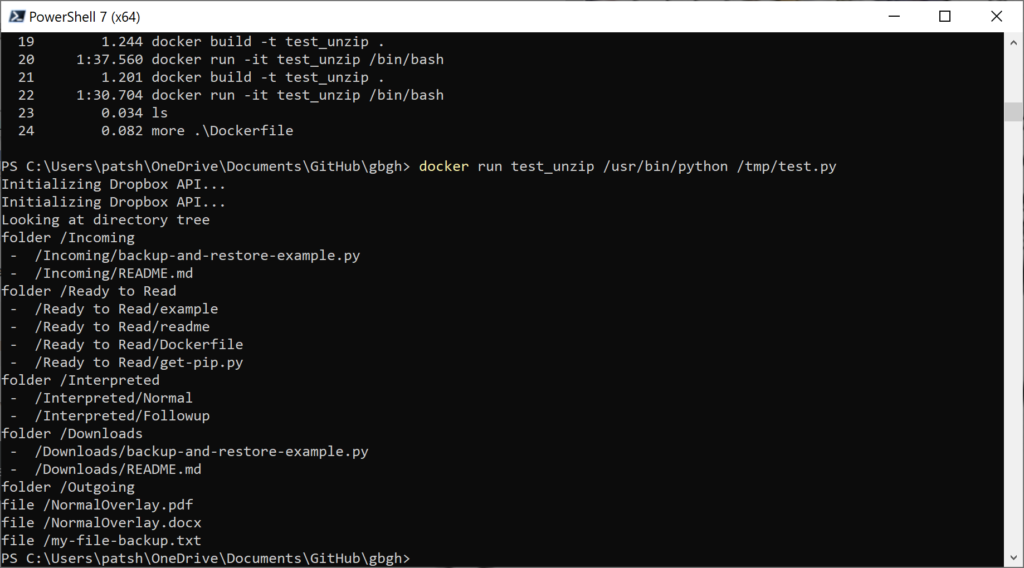

Looking at this file, we start from the ubuntu image and run some apt commands. We begin with an apt update to make sure we have the latest package lists. From there we install the timezone data first so that the software-properties-common installation does not stop and wait for input. The final installation pulls in a variety of packages, including the unzip and python3 binaries. Once everything is configured, we pull the latest Dropbox API library for Python and copy our test.py file from our desktop into the /tmp directory. Our users never see this complexity. Once everything has been built and packaged with Docker, they only need to run a simple command. The result can be run on macOS, on Windows, or from a PowerShell command line in the cloud.

Building the image takes a single command, run from the directory that holds the Dockerfile and test.py:

```bash
docker build -t test_unzip .
```

This command creates an image tagged test_unzip that we can run on any system with Docker installed (Windows, macOS, Azure containers, etc.). We can push the image to a Docker Hub account so that it can easily be pulled to any machine or cloud instance we have access to. We can run the image with

```bash
docker run -it test_unzip /bin/bash
```

to get an interactive command line, or

```bash
docker run test_unzip /usr/bin/python /tmp/test.py
```

to run our Python script. The beauty of this approach is that all of the complexity and logic lives in the test.py file that automates the unzip step. The user just needs to open a command line and execute the docker run command to unzip the files. Other tasks can be handled by a different Python script or JavaScript, so we should not need to change the base Docker image to change functionality. Once the script finishes, the container exits, which keeps our cloud costs and the performance impact on our desktop to a minimum.

The test.py file is relatively simple. It has two key elements. The first is the connection to Dropbox, which uses a user-generated token to access a folder. The second is the navigation of that folder's entries. Once the token has been generated on the http://dropbox.com/developer website, it can be incorporated into the code

```python
import dropbox

dbx = dropbox.Dropbox("<token>")
```

or passed in from the command line to make the code a little more secure. Once we have the Dropbox connection, we can navigate the folder entries by getting a folder listing and testing whether each entry is a folder or an actual file.

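```python
import dropbox


def list_all(dbx, path):
    """Return every entry in a folder, following has_more/cursor paging."""
    result = dbx.files_list_folder(path)
    entries = list(result.entries)
    while result.has_more:
        result = dbx.files_list_folder_continue(result.cursor)
        entries.extend(result.entries)
    return entries


# dbx is the dropbox.Dropbox connection created above; "" is the root folder
for entry in list_all(dbx, ""):
    if isinstance(entry, dropbox.files.FileMetadata):
        print("file", entry.path_display)
    else:
        print("folder", entry.path_display)
        for entry2 in list_all(dbx, entry.path_display):
            print(" - ", entry2.path_display)
```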

This code uses files_list_folder to get the current contents of the root directory (the empty path ""). If an entry is a dropbox.files.FileMetadata, it is an actual file. Otherwise, we assume it is a folder and list one level deeper to show the entries it contains. If we execute this code with the docker run command, we get the following output.

Automating the unzip process should then be a matter of finding a zip file in the Incoming folder, downloading it to the Ubuntu instance, unzipping it into a folder, and uploading the contents of that folder into the Ready to Read folder on Dropbox. The eventual goal is to build a Docker image, push it to an Azure Container Registry, and trigger the run command when a file is uploaded to an Azure Storage container rather than to Dropbox. We still want to push the unzipped files to Dropbox, since that is how the doctors get the data to interpret. Once this is done, we can work on automating the stamping and results-communication steps in a second Python script based on the same Docker / Azure container image.
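The unzip automation described above could be sketched roughly as follows, using the same Dropbox SDK calls as test.py plus `files_download_to_file` and `files_upload`. This is a sketch under several assumptions. The /Incoming and /Ready to Read folders are assumed to sit at the Dropbox root. Files are selected by a `.zip` extension. Each archive's contents are uploaded under a subfolder named after the zip. `files_upload` sends the whole file in one call, which Dropbox limits to 150 MB, and its default mode refuses to overwrite an existing file. Moving processed zips out of /Incoming is not handled. The helper names are hypothetical.

```python
import os
import tempfile
import zipfile
from pathlib import PurePosixPath

# Folder names from the post; assumed to sit at the Dropbox root.
INCOMING = "/Incoming"
READY = "/Ready to Read"


def incoming_zips(dbx, folder=INCOMING):
    """Yield the Dropbox paths of .zip files in `folder`, following paging."""
    result = dbx.files_list_folder(folder)
    while True:
        for entry in result.entries:
            if entry.name.lower().endswith(".zip"):
                yield entry.path_display
        if not result.has_more:
            return
        result = dbx.files_list_folder_continue(result.cursor)


def unzip_to_ready(dbx, zip_path, ready=READY):
    """Download one zip, extract it locally, upload each file to ready/<zip name>/."""
    stem = PurePosixPath(zip_path).stem
    uploaded = []
    with tempfile.TemporaryDirectory() as work:
        local_zip = os.path.join(work, "download.zip")
        dbx.files_download_to_file(local_zip, zip_path)
        out_dir = os.path.join(work, "unzipped")
        with zipfile.ZipFile(local_zip) as zf:
            zf.extractall(out_dir)
        for root, _dirs, files in os.walk(out_dir):
            for name in sorted(files):
                local_file = os.path.join(root, name)
                rel = os.path.relpath(local_file, out_dir).replace(os.sep, "/")
                target = f"{ready}/{stem}/{rel}"
                with open(local_file, "rb") as fh:
                    dbx.files_upload(fh.read(), target)  # single call, up to 150 MB
                uploaded.append(target)
    return uploaded


def process_incoming(dbx):
    """Unzip every archive in /Incoming into /Ready to Read; return uploaded paths."""
    return [path for zip_path in list(incoming_zips(dbx))
            for path in unzip_to_ready(dbx, zip_path)]
```

Inside test.py this could be driven with `process_incoming(dropbox.Dropbox(os.environ["DROPBOX_TOKEN"]))`, passing the token at run time with `docker run -e DROPBOX_TOKEN=<token> test_unzip python /tmp/test.py` so it never has to be baked into the image. `DROPBOX_TOKEN` is a hypothetical variable name.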