One of the nice things about a Raspberry Pi (any version) is the ability to program it from the command line or from an integrated development environment (IDE). In this post we will look at some of the programming languages that come pre-installed with the default operating system and how to add a couple more as desired.

Python

Python is a relatively simple programming language. The description at https://www.python.org/doc/essays/blurb/ reads:

“Python is an interpreted, object-oriented, high-level programming language with dynamic semantics. Its high-level built in data structures, combined with dynamic typing and dynamic binding, make it very attractive for Rapid Application Development, as well as for use as a scripting or glue language to connect existing components together. Python’s simple, easy to learn syntax emphasizes readability and therefore reduces the cost of program maintenance. Python supports modules and packages, which encourages program modularity and code reuse. The Python interpreter and the extensive standard library are available in source or binary form without charge for all major platforms, and can be freely distributed.”

What this means in a nutshell is that Python makes it easy to build complex things from simple constructs. You can create a description of something like a vehicle and assign a number of wheels to it. You can write routines to drive each wheel and potentially turn each wheel simply and easily. Modules and packages allow you to take the work of others and use it without having to write everything yourself. Documentation on Python can be found at https://docs.python.org/3/contents.html and chapter 6 of the tutorial details how to define and import modules https://docs.python.org/3/tutorial/modules.html# so that you can leverage the work of others or share your own.
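The vehicle idea above can be sketched in a few lines. This is only an illustration: the class names Wheel and Vehicle, their methods, and the choice that only the first wheel steers are all assumptions made for the example, not part of any library.

```python
class Wheel:
    """One wheel that can be driven and turned (hypothetical model)."""

    def __init__(self, position):
        self.position = position
        self.speed = 0
        self.angle = 0

    def drive(self, speed):
        self.speed = speed

    def turn(self, angle):
        self.angle = angle


class Vehicle:
    """A vehicle described by a name and a number of wheels."""

    def __init__(self, name, wheel_count):
        self.name = name
        self.wheels = [Wheel(i) for i in range(wheel_count)]

    def go(self, speed):
        # Drive every wheel at the same speed
        for wheel in self.wheels:
            wheel.drive(speed)

    def steer(self, angle):
        # Assumption for this sketch: only the first wheel steers
        self.wheels[0].turn(angle)


tricycle = Vehicle("tricycle", 3)
tricycle.go(5)
tricycle.steer(15)
print(tricycle.name, "has", len(tricycle.wheels), "wheels")
```

If the two classes were saved in a file of their own, another program could import them as a module instead of redefining them.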

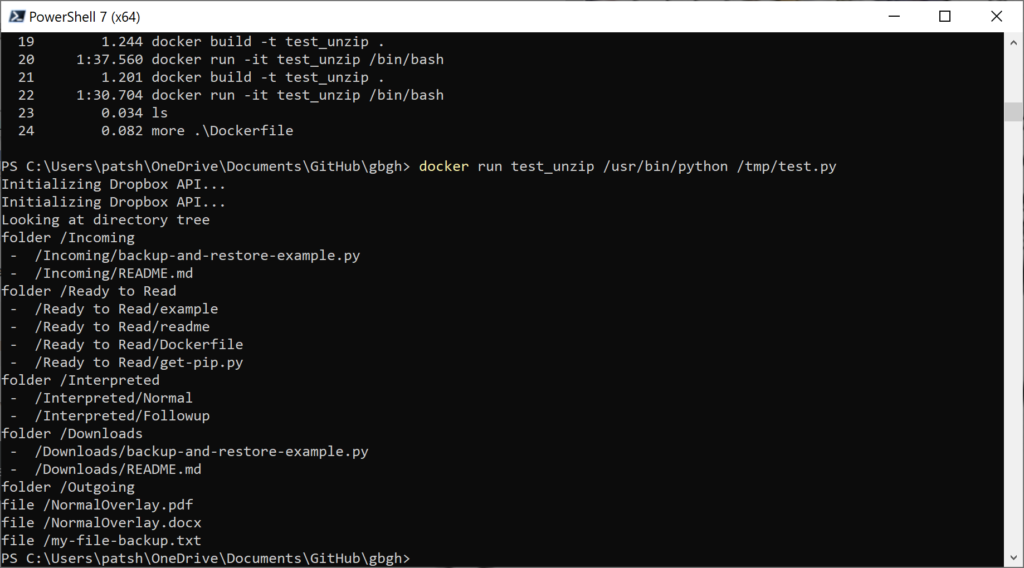

To test what version of Python is installed on your system, open a command prompt and type

python --version

The command should report version 3 or higher, with the minor and patch versions following the dots. To run code interactively, type python to start the interpreter and then type Python statements at its prompt. Each statement is executed as you enter it.

The print() function prints to the console whatever is between the quotes. The exit() command exits the interpreter and takes you back to the command line. Alternatively, you can enter the print and exit commands into a file and type python followed by the file name to execute the stored program. Traditionally a Python file uses the “.py” extension, so if we called our file Hello.py we would type
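As a minimal sketch, the stored program could look like the following. The greeting() helper is a hypothetical name used only to keep the example easy to check; a single print() line would do the same job. A call to exit() is optional in a file because the interpreter stops on its own when it reaches the end of the script.

```python
# Hello.py: the same statement typed at the interpreter, stored in a file

def greeting():
    """Return the text to print (hypothetical helper for this example)."""
    return "Hello World!"


print(greeting())
```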

python Hello.py

Note that capitalization matters on a Linux (or Raspberry Pi) system, so make sure the file name on disk and the name you pass to python match exactly. Hello.py and hello.py are two different files.

C and C++

The C and C++ programming languages are a little more structured than Python, and C++ in particular is useful when you want to define something as a kind of object rather than as one specific thing. If, for example, we wanted to describe a vehicle and the vehicle could be a bicycle, a tricycle, a car, a boat, or a rocket ship, we could define how to make it go or make it turn using a common subroutine. The subroutine could then determine that the vehicle is a rocket ship and ignite the engines, as opposed to turning a motor on the back wheel to get a bicycle to go. C and C++ are lower-level programming languages than Python and a little more difficult to learn and program. In exchange, they give you more direct control over the machine. Most operating systems are written in C and C++, which is why these languages make it easy to address and control physical devices on the computer. The GPIO (general purpose I/O) pins on the Raspberry Pi can be controlled from the command line using the pinctrl command. This command is written in C and talks to the operating system to manage the GPIO pins.

Programs written in C and C++ must be compiled from a source file. C source files typically end in a “.c” extension, and C++ source files typically end in “.cpp”. The tool that compiles these programs, the gcc compiler, is installed by default on the Raspberry Pi.

Compiling a program generates either an object file or an executable. We will start with a simple example as we did with Python, but save it as Hello.c instead of Hello.py to tell the compiler that this is a C file.

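    #include <stdio.h>

    int main() {
        /* Print the greeting followed by a newline */
        printf("Hello World!\n");
        /* Zero tells the shell the program finished without error */
        return 0;
    }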

Note that the first statement includes the stdio.h file. A file with the “.h” extension is a header file that declares things for the compiler. In this instance it declares the printf() subroutine that we call. The main() routine is the entry point for our code when we execute the compiled program. Declaring main as an int function means that it returns something to the command line when it finishes. If everything works as planned it typically returns zero, as shown in the code. If there is an error, something else is returned, and a shell script at the command line can do something different on a non-zero return. The printf() subroutine can take a string or a combination of strings and variables and print the result to the console. In this case we print a simple string.
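The return value is what the calling program sees when ours finishes. The sketch below shows that idea from the caller's side, written in Python to stay with one scripting language in this post. It starts a child process that exits with a chosen status and then reads that status back. The run_and_report() helper is a made-up name for this example, and the child is a throwaway Python one-liner standing in for a compiled program.

```python
import subprocess
import sys


def run_and_report(exit_code):
    """Run a child process that exits with exit_code and return the status the caller sees."""
    child = [sys.executable, "-c", f"import sys; sys.exit({exit_code})"]
    result = subprocess.run(child)
    return result.returncode


for code in (0, 3):
    status = run_and_report(code)
    if status == 0:
        print("child succeeded")
    else:
        print("child failed with status", status)
```

A shell script makes the same kind of decision when it checks whether the previous command returned zero.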

Compiling the code typically takes two phases. The first phase generates an object file that can be combined with other object files to generate an executable. The -c option for gcc stops after this phase and writes a file with a “.o” extension. If we have multiple object files, as is the case with multiple subroutines or object definitions, we can link them together to generate an executable. If, for example, we wanted to define how to drive a bicycle we might have files called bicycle.c, turn.c, go.c, and stop.c. The turn subroutine would exist in the turn.c file and get compiled into a turn.o object file, for example with gcc -c turn.c. The same applies to go.c and stop.c, which generate go.o and stop.o. We would then pass all four object files to gcc and use the -o option to name the resulting executable, for example gcc -o bicycle bicycle.o turn.o go.o stop.o.

In this example we compile all of the source files to object files using the “-c” option, then combine all of the object files into an executable named with the “-o” option. If we type the file command followed by the file names, the operating system shows us what type each file is. The “.c” file is a C source file. The “.o” file is a relocatable object compiled for the Raspberry Pi. Finally, the bicycle file is an executable ready to run on the Raspberry Pi.

The benefit of putting the go, stop, and turn modules in separate files is that we can define how to make a bicycle go in the go file, and this same file can then be used again to make a tricycle or a car go by expanding the way the go function operates. Given that a bicycle and a tricycle are both started by pedaling, the code can be “reused” for a different object. For a car we have to turn on the engine, put the car in gear, and press the accelerator, so the go operation is a little more complex, but it can be defined in the same file to make development and reuse easier in the long run.
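The reuse described above can be sketched as a single go routine that handles several vehicles. The sketch is in Python rather than C to keep it short, and the function name, the vehicle names, and the step descriptions are illustrative assumptions. In the C layout the same logic would live in go.c.

```python
def go(vehicle):
    """Return the steps needed to get the given vehicle moving."""
    if vehicle in ("bicycle", "tricycle"):
        # Both are started by pedaling, so they share one code path
        return ["pedal"]
    if vehicle == "car":
        return ["turn on the engine", "put the car in gear", "press the accelerator"]
    raise ValueError(f"don't know how to make a {vehicle} go")


for vehicle in ("bicycle", "tricycle", "car"):
    print(vehicle, "->", ", ".join(go(vehicle)))
```

Adding a new vehicle means extending this one routine, and every program that calls go() picks up the change.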

Java

The Java programming language was developed many years after the C and C++ languages and was designed to overcome some of the problems with those languages. Java is an object-oriented language, and a central design goal is that code is portable from one machine to another. If something is written on a Macintosh, it can run on a Windows PC or on a Raspberry Pi with little or no modification.

Unfortunately, Java is typically not installed on the Raspberry Pi and needs to be installed.

To install Java, run the apt-get command as root to install the compiler and the Java runtime on the Raspberry Pi:

sudo apt-get install default-jdk

The sudo command allows you to run a command as root. Your user needs sudo privileges, which are granted through the /etc/sudoers file, to run commands as root. The apt-get command tells the operating system that you are doing something with its software packages. The install option says that you want to download and install a software package. The default-jdk argument says that the package you want is the distribution's default Java Development Kit. Once you type Y at the confirmation prompt, the installation begins.

Once the installation is finished we should be able to type java --version and see which version of the Java Development Kit was installed.

Using our previous example, we will print Hello World! from Java using the following code, saved as Hello.java:

    class HelloWorld {
        // Entry point: args holds any parameters passed on the command line
        public static void main(String[] args) {
            System.out.println("Hello World!");
        }
    }

In C/C++ we defined a main routine that was the start of our program. In Java we do the same thing but need to define it a little differently. In Java we have to define main() as a public subroutine that can be called from outside the class, and have it accept input variables so that we can pass in parameters from the command line. Rather than using the printf() routine we use the System.out.println() routine. This routine prints a string, or a combination of strings and variables, to the console (System.out) followed by a newline. Note that there is no return value as there was in our C program, because we defined the routine as static void, which means that it does not return anything. We also did not include anything like the stdio.h definition to understand the print subroutine. Java makes the System class available automatically, so you don’t need to include those definitions. The Java virtual machine takes care of going from the compiled code to the underlying operating system.

To compile Java code we type javac Hello.java. This generates a “.class” file which can be taken to other machines and executed. We don’t need to recompile our Java code for a different operating system or chip architecture. Note that the output of javac is HelloWorld.class even though we had everything stored in Hello.java. This is because our class is named HelloWorld. Had we named it HelloMom, javac would have written a HelloMom.class file. To execute the code we type java HelloWorld, which reads in the HelloWorld.class file and jumps to the main() method.

Note that the file command lists the .java file as a C++ source file, but it is really a Java source file. The .class file is the compiled object that can be executed from the command line with the java command. Also note that no native binary is generated as there was with C/C++. If multiple source files are needed, as with the bicycle example, we would list all of the source files after the javac command to compile them. The java command is then given only the class that contains main() and loads the other class files as they are needed.

In summary, the default languages that can be used to program your Raspberry Pi include:

- shell programming language

- Python

- C/C++

- (optional) Java

The first three are installed by default and Java can be installed with the apt-get command. Other programming languages are available, so this is not a complete list. Most are installed the same way we installed Java. Moving forward we will look at all four of these languages for program examples on the Raspberry Pi.