In this tutorial we develop a simple Alexa skill using the Alexa development tools and the AWS Lambda service. The skill looks at the current date and says how many days remain until a target date that we define. The configuration is static and self-contained and does not rely on any other services.

First, log in to https://developer.amazon.com.

From here, we click on the Alexa link to get access to the development console.

Click on the DEVELOPER CONSOLE button at the top right of the screen. This will take you to an authentication screen (assuming that you have signed up for the development tools for your account).

This takes you to the developer console.

Click on the Apps & Services button at the top left.

Click on Alexa to get the Alexa development console.

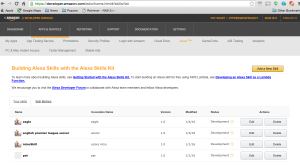

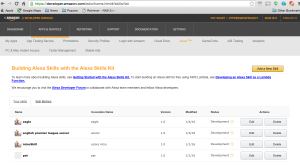

Click on the Alexa Skills Kit to see the skills that you have defined. It is important to note that these skills are available only to the account that you logged in with. When you set up your Amazon Echo, you associate an account with the Echo. This linkage allows you to test these apps (called skills) on your Echo before releasing them to Amazon for wide distribution.

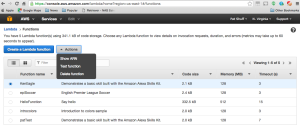

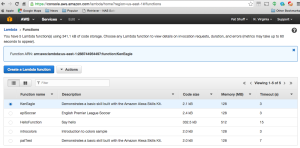

When you click on the Skills Kit you see a list of skills that you have defined. From here you can create new skills and edit old ones. In this example we have four skills that we have developed: eagle, English premier league soccer, introSkill, and pat.

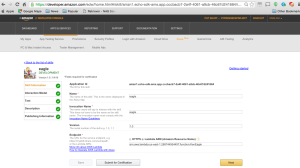

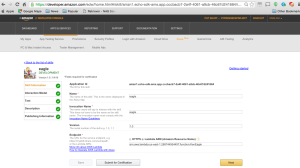

If we click on the edit button to the right of eagle, we can look at how we have defined the eagle skill.

In this example, we name the skill eagle and it is invoked by saying “Alexa, load eagle”. We also link the skill to a Lambda function identified by the Amazon Resource Name (ARN) arn:aws:lambda:us-east-1:288744954497:function:KenEagle.

The second thing that we need to define is the intents, slots, and utterances for this skill. This is done by clicking on Interaction Model in the left menu. Note that this skill reports two things. The first is the number of days until Ken’s 18th birthday, which is the last day that he can earn his Boy Scout Eagle rank. The second is the number of days until he graduates from high school. Launching the skill without a specific request announces the number of days until both events. If you ask for eagle, it reports only the number of days until his 18th birthday. If you ask for graduation, it reports only the number of days until his graduation ceremony.

Note that we have two intents, EagleIntent and GraduationIntent. Each one is handled by a routine defined in the Lambda function.
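As a rough illustration of what the Interaction Model for this skill might contain, here is a minimal sketch written as JavaScript data. It assumes the JSON intent schema and one-line-per-phrase sample utterance format that the Skills Kit console used at the time of writing; the intent names come from the Lambda code later in this tutorial, while the exact contents of the author's console fields are an assumption.

```javascript
// Hypothetical reconstruction of the intent schema for the eagle skill.
// The three intent names match the ones handled in the Lambda code.
const intentSchema = {
  intents: [
    { intent: "EagleIntent" },
    { intent: "GraduationIntent" },
    { intent: "AMAZON.HelpIntent" }
  ]
};

// Sample utterances: intent name first, then the phrase that triggers it.
const sampleUtterances = [
  "EagleIntent eagle",
  "GraduationIntent graduation"
];

// Print both in the form that would be pasted into the console fields.
console.log(JSON.stringify(intentSchema, null, 2));
console.log(sampleUtterances.join("\n"));
```

No slots are shown because the two intents take no parameters.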

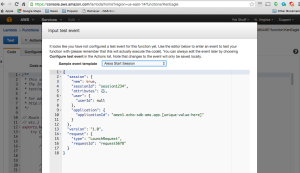

The Test tab allows us to enter the text translation of an utterance and see how the system responds. Note that we get not only what will be said with the outputSpeech but also the card that is displayed in the app that runs on your phone or tablet. In this example we used the utterance “eagle” to see what it responds with.

In the Description tab we enter a short and a longer description of the skill that will be used when you publish it. This information is not critical if you are self-publishing and have no intent to share this skill with others.

We also include a couple of icons for a small and large logo associated with this skill. We can also define more information that Amazon needs to figure out if this skill should be shared with others and how it might work before they certify the skill.

It is important to note that the last screen is not needed if you have no intention of publishing your skill and only want to run it on your personal Echo.

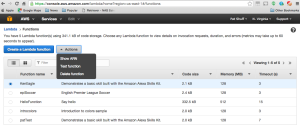

The second part of the skill is the Lambda function. Lambda is managed from the AWS console, https://console.aws.amazon.com.

Click on the Lambda console.

In this console, we see Lambda functions that we have created. We can click on the radio button to the left of the name and perform a variety of actions on the function. One is to look up the ARN which we needed for the Alexa Skill Kit.

Clicking on the Show ARN gives us the unique identifier that we need.

Clicking on the Test Function pull down allows us to select a standard test to run our code if we want to. This is the easiest way to test the routine that runs when you launch your skill without any utterance. For example, saying “Alexa, load eagle” is equivalent to the Alexa Start Session test, which exercises the onLaunch() function.

There are five things that you can do with this user interface. The first is edit your code for this skill. We will dive into the code in a bit but the initial screen does show you the inline code that you create.

The second option on this screen is Configuration. Here we specify that the function is invoked through index.handler, so our code needs to export a handler. We also associate an execution role with this function, allowing it to run on the AWS Lambda service. We select the lambda_basic_execution role.

The Event sources tab defines how the function is called. We add an event source and select the Alexa Skills Kit to invoke this Lambda function.

We don’t really use the API endpoints or Monitoring for this application. The Lambda free tier includes 1,000,000 requests a month. Based on what we are doing (on one device) we should never get that many invocations, and we are not calling this function from other services.

In the code section, the first thing that we do is define a handler:

    // Route the incoming request based on type (LaunchRequest, IntentRequest,
    // etc.) The JSON body of the request is provided in the event parameter.
    exports.handler = function (event, context) {
        try {
            console.log("event.session.application.applicationId=" + event.session.application.applicationId);

            /**
             * Uncomment this if statement and populate with your skill's application ID to
             * prevent someone else from configuring a skill that sends requests to this function.
             */
            /*
            if (event.session.application.applicationId !== "amzn1.echo-sdk-ams.app.[unique-value-here]") {
                context.fail("Invalid Application ID");
            }
            */

            if (event.session.new) {
                onSessionStarted({requestId: event.request.requestId}, event.session);
            }

            if (event.request.type === "LaunchRequest") {
                onLaunch(event.request,
                    event.session,
                    function callback(sessionAttributes, speechletResponse) {
                        context.succeed(buildResponse(sessionAttributes, speechletResponse));
                    });
            } else if (event.request.type === "IntentRequest") {
                onIntent(event.request,
                    event.session,
                    function callback(sessionAttributes, speechletResponse) {
                        context.succeed(buildResponse(sessionAttributes, speechletResponse));
                    });
            } else if (event.request.type === "SessionEndedRequest") {
                onSessionEnded(event.request, event.session);
                context.succeed();
            }
        } catch (e) {
            context.fail("Exception: " + e);
        }
    };

All of this code is boilerplate from the Skills Kit sample. The important thing to note in this section is that there are three request types: LaunchRequest, IntentRequest, and SessionEndedRequest. A LaunchRequest calls onLaunch, which we define later in the code. An IntentRequest calls onIntent, which maps the intents that we defined earlier to functions. The last, SessionEndedRequest, calls onSessionEnded, which is the place to clean up anything that we created during the session.
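To see the routing without an Echo or the Lambda console, one option is to call a handler locally with a hand-built event and a mock context. The sketch below is an assumption-heavy illustration: `handler` is a small stand-in with the same routing shape as the handler above (not the real one), `makeEvent` and `makeContext` are hypothetical helpers, and the event carries only the fields the routing reads rather than a full Alexa request.

```javascript
// Stand-in handler with the same routing shape as the tutorial's handler.
// It is hypothetical and exists only so that this sketch runs on its own.
function handler(event, context) {
  try {
    if (event.request.type === "LaunchRequest") {
      context.succeed({ routedTo: "onLaunch" });
    } else if (event.request.type === "IntentRequest") {
      context.succeed({ routedTo: "onIntent", intent: event.request.intent.name });
    } else if (event.request.type === "SessionEndedRequest") {
      context.succeed();
    }
  } catch (e) {
    context.fail("Exception: " + e);
  }
}

// Build a minimal event containing only the fields the routing code reads.
function makeEvent(type, intentName) {
  var request = { type: type, requestId: "request-1" };
  if (intentName) { request.intent = { name: intentName }; }
  return {
    session: {
      new: true,
      sessionId: "session-1",
      application: { applicationId: "amzn1.echo-sdk-ams.app.example" }
    },
    request: request
  };
}

// Mock of the context object: it records what the handler reports.
function makeContext() {
  var ctx = { result: undefined, error: undefined };
  ctx.succeed = function (result) { ctx.result = result; };
  ctx.fail = function (error) { ctx.error = error; };
  return ctx;
}

var launchContext = makeContext();
handler(makeEvent("LaunchRequest"), launchContext);
console.log(launchContext.result);

var intentContext = makeContext();
handler(makeEvent("IntentRequest", "EagleIntent"), intentContext);
console.log(intentContext.result);
```

Swapping the stand-in for the real exports.handler should exercise onLaunch and onIntent in the same way, provided the rest of the file is loaded.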

    /**
     * Called when the session starts.
     */
    function onSessionStarted(sessionStartedRequest, session) {
        console.log("onSessionStarted requestId=" + sessionStartedRequest.requestId +
            ", sessionId=" + session.sessionId);
    }

The onSessionStarted function only logs the fact that the skill was called. We don’t do anything beyond logging when a session is created.

    /**
     * Called when the user launches the skill without specifying what they want.
     */
    function onLaunch(launchRequest, session, callback) {
        console.log("onLaunch requestId=" + launchRequest.requestId +
            ", sessionId=" + session.sessionId);

        // Dispatch to your skill's launch.
        getWelcomeResponse(callback);
    }

onLaunch is called if the user launches the skill with no utterances. This then logs the session creation and calls the getWelcomeResponse function. We could include the getWelcomeResponse code in this function or ask for an utterance before doing anything.

    /**
     * Called when the user specifies an intent for this skill.
     */
    function onIntent(intentRequest, session, callback) {
        console.log("onIntent requestId=" + intentRequest.requestId +
            ", sessionId=" + session.sessionId);

        var intent = intentRequest.intent,
            intentName = intentRequest.intent.name;

        // Dispatch to your skill's intent handlers
        if ("EagleIntent" === intentName) {
            EagleSession(intent, session, callback);
        } else if ("GraduationIntent" === intentName) {
            GraduationSession(intent, session, callback);
        } else if ("AMAZON.HelpIntent" === intentName) {
            getWelcomeResponse(callback);
        } else {
            throw "Invalid intent";
        }
    }

The onIntent function takes the intent that the Alexa Skills Kit matched from the utterance and dispatches it to a function. Remember that we created two intents and two utterances: “eagle” maps to EagleIntent and “graduation” maps to GraduationIntent. These are defined in the Interaction Model section and are implemented in this code. We also handle the built-in AMAZON.HelpIntent if you ask for help. Any other intent throws an exception with the message “Invalid intent”.
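As the number of intents grows, the if/else chain can be swapped for a lookup table. The following is a sketch of that alternative, not the tutorial's code: `dispatchIntent` and `intentHandlers` are hypothetical names, and the three handler functions are tiny stand-ins for the real ones so that the example runs by itself.

```javascript
// Hypothetical stand-ins for the tutorial's handlers so the sketch runs alone.
function EagleSession(intent, session, callback) { callback({}, "eagle"); }
function GraduationSession(intent, session, callback) { callback({}, "graduation"); }
function getWelcomeResponse(callback) { callback({}, "welcome"); }

// Map each intent name to the function that handles it.
var intentHandlers = {
  "EagleIntent": EagleSession,
  "GraduationIntent": GraduationSession,
  "AMAZON.HelpIntent": function (intent, session, callback) {
    getWelcomeResponse(callback);
  }
};

function dispatchIntent(intentRequest, session, callback) {
  var intent = intentRequest.intent;
  // hasOwnProperty guards against names such as "toString" matching
  // properties inherited from Object.prototype.
  if (!Object.prototype.hasOwnProperty.call(intentHandlers, intent.name)) {
    throw new Error("Invalid intent: " + intent.name);
  }
  intentHandlers[intent.name](intent, session, callback);
}

// Usage: route an EagleIntent request and print what the handler returned.
dispatchIntent({ intent: { name: "EagleIntent" } }, {}, function (attributes, response) {
  console.log(response);
});
```

Adding a new intent then means adding one entry to the table instead of another else-if branch.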

    /**
     * Called when the user ends the session.
     * Is not called when the skill returns shouldEndSession=true.
     */
    function onSessionEnded(sessionEndedRequest, session) {
        console.log("onSessionEnded requestId=" + sessionEndedRequest.requestId +
            ", sessionId=" + session.sessionId);
        // Add cleanup logic here
    }

The onSessionEnded function does not need to clean up anything, so it only logs that the skill exited for debugging purposes.

    // --------------- Functions that control the skill's behavior -----------------------

    function getWelcomeResponse(callback) {
        // If we wanted to initialize the session to have some attributes we could add those here.
        var sessionAttributes = {};
        var cardTitle = "Welcome";
        var today = new Date();
        var months = ['January', 'February', 'March', 'April', 'May', 'June', 'July', 'August', 'September', 'October', 'November', 'December'];
        var month = months[today.getMonth()];
        var day = today.getDate();
        // Months are zero-based, so 2 is March. A leading zero (02) is a legacy
        // octal literal and is rejected in strict mode, so it is written as 2.
        var target = new Date(2016, 2, 24, 0, 0);
        var postfix = "th";
        // var endd = Date.parseExact(end, "2016-03-24");
        // Subtracting two dates yields the difference in milliseconds.
        var diff = target - today;
        var days = Math.round(diff/1000/60/60/24);
        // Pick the ordinal suffix; the 11th, 12th, and 13th keep "th".
        if (day === 1 || day === 21 || day === 31) { postfix = "st"; }
        else if (day === 2 || day === 22) { postfix = "nd"; }
        else if (day === 3 || day === 23) { postfix = "rd"; }
        var graduation = new Date(2016, 4, 28, 0, 0);
        var diff2 = graduation - today;
        var speechOutput = "Ken only has " + days + " days left to finish his Eagle and " + Math.round(diff2/1000/60/60/24) + " days until graduation";
        // var speechOutput = "Ken only has " + days + " days left to finish his Eagle";

        // If the user either does not reply to the welcome message or says something that is not
        // understood, they will be prompted again with this text.
        var repromptText = "Today's date is " + month + " " + day + postfix;
        var shouldEndSession = false;

        callback(sessionAttributes,
            buildSpeechletResponse(cardTitle, speechOutput, repromptText, shouldEndSession));
    }

The getWelcomeResponse function is called from onLaunch or from the help intent. This is the meat of the application. We define a few things like session attributes that can be passed to other functions and the card information for the app that runs on the phone or tablet, complementing the Amazon Echo verbal interface.

The intent of this code is to speak the number of days between today and March 24th and between today and May 28th. These two days are selected because the 24th is Ken’s birthday and the 28th is his graduation day. We first get today’s date by calling the JavaScript Date() constructor. We want to convert this date from the default date format into something that can be spoken. We first define an array of months that translates the numeric months (0..11) to words (January…December). We also pull out the day so that we can say that today is January 4th. The postfix is “st”, “nd”, or “rd” for days such as the 1st, 2nd, and 3rd, and “th” for all other days.
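The suffix rule can also be written once as a general helper that covers every day of the month, including the 11th through 13th. This is a sketch rather than part of the original skill; `ordinalSuffix` and `spokenDate` are hypothetical names.

```javascript
// Return the English ordinal suffix for a day of the month.
function ordinalSuffix(day) {
  var lastTwo = day % 100;
  // 11, 12, and 13 are irregular: "11th", not "11st".
  if (lastTwo >= 11 && lastTwo <= 13) { return "th"; }
  switch (day % 10) {
    case 1: return "st";
    case 2: return "nd";
    case 3: return "rd";
    default: return "th";
  }
}

// Turn a Date into a phrase such as "January 12th".
function spokenDate(date) {
  var months = ["January", "February", "March", "April", "May", "June",
    "July", "August", "September", "October", "November", "December"];
  var day = date.getDate();
  return months[date.getMonth()] + " " + day + ordinalSuffix(day);
}

console.log(spokenDate(new Date(2016, 0, 12))); // January 12th
console.log(spokenDate(new Date(2016, 2, 23))); // March 23rd
```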

We also define the target date as March 24th, 2016 with the Date(2016,2,24,0,0) function call. Note that we pass in the year 2016 but pass in 2 for the month. The months start with 0 for January so March is a 2 in this representation. We next pass in 24 for the day of the month. The zeroes represent hours and minutes. Since we only care about days, we zero out these values.

Once we know our target as well as today’s date, we can take the difference of the two and round it to the nearest whole day. Subtracting two dates gives a value in milliseconds, so we need to convert the delta into days. This is done by dividing by 1000 (to get seconds), by 60 (to get minutes), by 60 again (to get hours), and by 24 (to get days). The Math.round(diff/1000/60/60/24) call performs this conversion and returns the difference in days between our target and today.
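The arithmetic can be checked with a fixed "today" instead of the current clock. In this sketch `daysUntil` and `MS_PER_DAY` are hypothetical names, and January 4th, 2016 is an assumed date chosen only to make the numbers reproducible.

```javascript
// 1000 ms * 60 s * 60 min * 24 h = 86,400,000 milliseconds in one day.
var MS_PER_DAY = 1000 * 60 * 60 * 24;

// Whole days from `now` to `target`, rounded to the nearest day.
function daysUntil(target, now) {
  return Math.round((target - now) / MS_PER_DAY);
}

var now = new Date(2016, 0, 4, 0, 0);         // assumed "today": January 4th, 2016
var eagle = new Date(2016, 2, 24, 0, 0);      // March 24th, 2016
var graduation = new Date(2016, 4, 28, 0, 0); // May 28th, 2016

console.log(daysUntil(eagle, now));      // 80
console.log(daysUntil(graduation, now)); // 145
```

Because Math.round rounds to the nearest day, a one-hour daylight saving shift between the two dates does not change the result.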

We also look at our second target, graduation, and calculate the diff2 for days till graduation from today.

The speechOutput definition is what will be said by the Amazon Echo. It is important to note that this should be natural speech and not raw numbers. We could say “Today’s date is ” + today, but the raw date string will be confusing to the person listening to the Echo. By converting this to natural language, 01-12-2016 translates into January 12th.

The repromptText is what Alexa says if the session stays open and the user either does not reply or says something that is not understood. It is typically a shorthand for what was initially said. In our example getWelcomeResponse says the number of days until Ken’s 18th birthday as well as the number of days until his graduation, and the reprompt states today’s date.

The shouldEndSession variable is true if you want to exit the skill after this function is executed and false otherwise. In this example, we do not want to exit after the getWelcomeResponse so we set the variable to false.

The last thing that we do is call buildSpeechletResponse, which builds the spoken output from speechOutput and the card for the app from cardTitle. If shouldEndSession is true, the skill exits. If it is false, we wait for an utterance to call an intent.

    function EagleSession(intent, session, callback) {
        var sessionAttributes = {};
        var cardTitle = "Welcome";
        var today = new Date();
        var months = ['January', 'February', 'March', 'April', 'May', 'June', 'July', 'August', 'September', 'October', 'November', 'December'];
        var month = months[today.getMonth()];
        var day = today.getDate();
        // Months are zero-based, so 2 is March.
        var target = new Date(2016, 2, 24, 0, 0);
        var postfix = "th";
        // var endd = Date.parseExact(end, "2016-03-24");
        // Subtracting two dates yields the difference in milliseconds.
        var diff = target - today;
        var days = Math.round(diff/1000/60/60/24);
        // Pick the ordinal suffix; the 11th, 12th, and 13th keep "th".
        if (day === 1 || day === 21 || day === 31) { postfix = "st"; }
        else if (day === 2 || day === 22) { postfix = "nd"; }
        else if (day === 3 || day === 23) { postfix = "rd"; }
        var graduation = new Date(2016, 4, 28, 0, 0);
        var diff2 = graduation - today;
        var speechOutput = "Ken only has " + days + " days left to finish his Eagle";
        // var speechOutput = "Ken only has " + days + " days left to finish his Eagle";

        // If the user either does not reply to the welcome message or says something that is not
        // understood, they will be prompted again with this text.
        var repromptText = "Today's date is " + month + " " + day + postfix;
        var shouldEndSession = true;

        callback(sessionAttributes,
            buildSpeechletResponse(cardTitle, speechOutput, repromptText, shouldEndSession));
    }

The EagleSession is the routine called from the EagleIntent. The key difference for this function is what is returned for the speechOutput. In this function we only report the days till his 18th birthday and not his graduation date. Note that we calculate the graduation delta but do not print it out. This was done because we basically copied the previous code and dropped it into this function changing only the speechOutput.

    function GraduationSession(intent, session, callback) {
        var sessionAttributes = {};
        var cardTitle = "Welcome";
        var today = new Date();
        var months = ['January', 'February', 'March', 'April', 'May', 'June', 'July', 'August', 'September', 'October', 'November', 'December'];
        var month = months[today.getMonth()];
        var day = today.getDate();
        // Months are zero-based, so 2 is March.
        var target = new Date(2016, 2, 24, 0, 0);
        var postfix = "th";
        // var endd = Date.parseExact(end, "2016-03-24");
        // Subtracting two dates yields the difference in milliseconds.
        var diff = target - today;
        var days = Math.round(diff/1000/60/60/24);
        // Pick the ordinal suffix; the 11th, 12th, and 13th keep "th".
        if (day === 1 || day === 21 || day === 31) { postfix = "st"; }
        else if (day === 2 || day === 22) { postfix = "nd"; }
        else if (day === 3 || day === 23) { postfix = "rd"; }
        var graduation = new Date(2016, 4, 28, 0, 0);
        var diff2 = graduation - today;
        var speechOutput = "Ken only has " + Math.round(diff2/1000/60/60/24) + " days left till graduation";
        // var speechOutput = "Ken only has " + days + " days left to finish his Eagle";

        // If the user either does not reply to the welcome message or says something that is not
        // understood, they will be prompted again with this text.
        var repromptText = "Today's date is " + month + " " + day + postfix;
        var shouldEndSession = true;

        callback(sessionAttributes,
            buildSpeechletResponse(cardTitle, speechOutput, repromptText, shouldEndSession));
    }

The GraduationSession function is again the same code with the speechOutput changed. Note that, like EagleSession, it sets shouldEndSession to true so that the skill exits after answering. This was done somewhat arbitrarily, mainly to test the feature.
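Since the three functions differ only in the sentence they speak, the shared date logic could be pulled into one helper. The following is one possible refactoring, sketched under assumptions: `TARGETS`, `daysBetween`, and `countdownSpeech` are hypothetical names, and the `now` parameter exists only so the output can be reproduced with a fixed date.

```javascript
// Target dates and the phrase that follows the day count for each one.
var TARGETS = {
  eagle: { date: new Date(2016, 2, 24, 0, 0), phrase: "left to finish his Eagle" },
  graduation: { date: new Date(2016, 4, 28, 0, 0), phrase: "left till graduation" }
};

// Whole days from `now` to `target`, rounded to the nearest day.
function daysBetween(now, target) {
  return Math.round((target - now) / 1000 / 60 / 60 / 24);
}

// Build the sentence for one target; `key` is "eagle" or "graduation".
function countdownSpeech(key, now) {
  var target = TARGETS[key];
  return "Ken only has " + daysBetween(now, target.date) + " days " + target.phrase;
}

var fixedToday = new Date(2016, 0, 4, 0, 0); // assumed date for a repeatable result
console.log(countdownSpeech("eagle", fixedToday));
console.log(countdownSpeech("graduation", fixedToday));
```

With a helper like this, each intent function would shrink to a call to countdownSpeech followed by the callback.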

    function buildSpeechletResponse(title, output, repromptText, shouldEndSession) {
        return {
            outputSpeech: {
                type: "PlainText",
                text: output
            },
            card: {
                type: "Simple",
                title: "SessionSpeechlet - " + title,
                content: "SessionSpeechlet - " + output
            },
            reprompt: {
                outputSpeech: {
                    type: "PlainText",
                    text: repromptText
                }
            },
            shouldEndSession: shouldEndSession
        };
    }

The buildSpeechletResponse function builds what Alexa will say upon return from an intent call. The response is returned as a JSON structure using the output (or speechOutput) defined by the calling routine.

    function buildResponse(sessionAttributes, speechletResponse) {
        return {
            version: "1.0",
            sessionAttributes: sessionAttributes,
            response: speechletResponse
        };
    }

The result of buildResponse is what is finally returned to the Echo through the Alexa Skills Kit. This is a JSON structure containing all of the data needed to create the card and speak the response through the speakers.
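Put together, the structure returned for an eagle request would look roughly like the object below. The values are illustrative only: the day count and the date assume the request was made on January 4th, 2016, and `exampleResponse` is a hypothetical name used for this sketch.

```javascript
// Illustrative response envelope for an EagleIntent request.
// The numbers assume "today" is January 4th, 2016.
var exampleResponse = {
  version: "1.0",
  sessionAttributes: {},
  response: {
    outputSpeech: {
      type: "PlainText",
      text: "Ken only has 80 days left to finish his Eagle"
    },
    card: {
      type: "Simple",
      title: "SessionSpeechlet - Welcome",
      content: "SessionSpeechlet - Ken only has 80 days left to finish his Eagle"
    },
    reprompt: {
      outputSpeech: {
        type: "PlainText",
        text: "Today's date is January 4th"
      }
    },
    shouldEndSession: true
  }
};

// Serialize it the way it would travel back to the Alexa service.
console.log(JSON.stringify(exampleResponse, null, 2));
```

The outputSpeech and card fields are the same ones shown in the Test tab of the Skills Kit console.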

Overall this example is very simple. The only complex part of the code is defining two dates, getting today’s date, and calculating the delta between each of the two dates and today’s date. I suggest that if you want to play with this code, add a third intent. Pick a target date like July 4th and see how many days are between today and July 4th. A simple hint is that the target date should be new Date(2016,6,4,0,0). You might need to create a couple of utterances because some people say “the 4th of July” and others say “July 4th”. If you want to get really complex, pick something like Valentine’s Day or Easter as your target and figure out what day that is by making a search call to a web page. If you type “when is easter 2016” into a Google search, Google returns Sunday, March 27 embedded in a web page. The challenge in this request is turning the answer embedded in that web page into a Date() call for the date returned. Once you figure out how to translate this natural language query into a computer query, you can make a generic “how many days till ...” query and a generic Alexa skill.
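As a starting point for that exercise, a third handler might look like the sketch below. Everything named here is hypothetical: `JulyFourthSession`, the `JulyFourthIntent` name in the utterances, and the extra `now` argument, which is not part of the tutorial's handler signature and is included only so the result can be checked with a fixed date. The response object is a trimmed-down stand-in for what buildSpeechletResponse would return.

```javascript
// Hypothetical third handler that follows the pattern of EagleSession.
function JulyFourthSession(intent, session, callback, now) {
  var today = now || new Date();
  var target = new Date(2016, 6, 4, 0, 0); // month 6 is July
  var days = Math.round((target - today) / 1000 / 60 / 60 / 24);
  var speechOutput = "There are " + days + " days until July 4th";
  callback({}, {
    outputSpeech: { type: "PlainText", text: speechOutput },
    shouldEndSession: true
  });
}

// Two sample utterances, since people phrase the date both ways.
var julyFourthUtterances = [
  "JulyFourthIntent july fourth",
  "JulyFourthIntent the fourth of july"
];

// Usage with an assumed "today" of January 4th, 2016.
JulyFourthSession({}, {}, function (attributes, response) {
  console.log(response.outputSpeech.text); // There are 182 days until July 4th
}, new Date(2016, 0, 4, 0, 0));
```

The new intent would also need an entry in the intent schema and another branch in onIntent.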