HashiCorp is holding its annual user conference online this year, and I will be attending virtually to learn what is new and being announced around Terraform. The conference runs for two days, Oct 14th and 15th, preceded by two days of workshops on the 12th and 13th. This blog covers only part of the full schedule since not all of the presentations are Terraform-centric.

HashiConf Digital Opening Keynote

The opening keynote was interesting, with shots from the presenters' homes. The number of attendees (12K) and the new number of employees (1K) stood out. The rest was mostly marketing information about HashiCorp. Some interesting facts: 1K enterprise customers, 6K new users/month, and growth with cloud partners and technology partners. Certification program – http://hashicorp.com/certification. Learning program – http://learn.hashicorp.com

Vault as a Security Platform & Future Direction

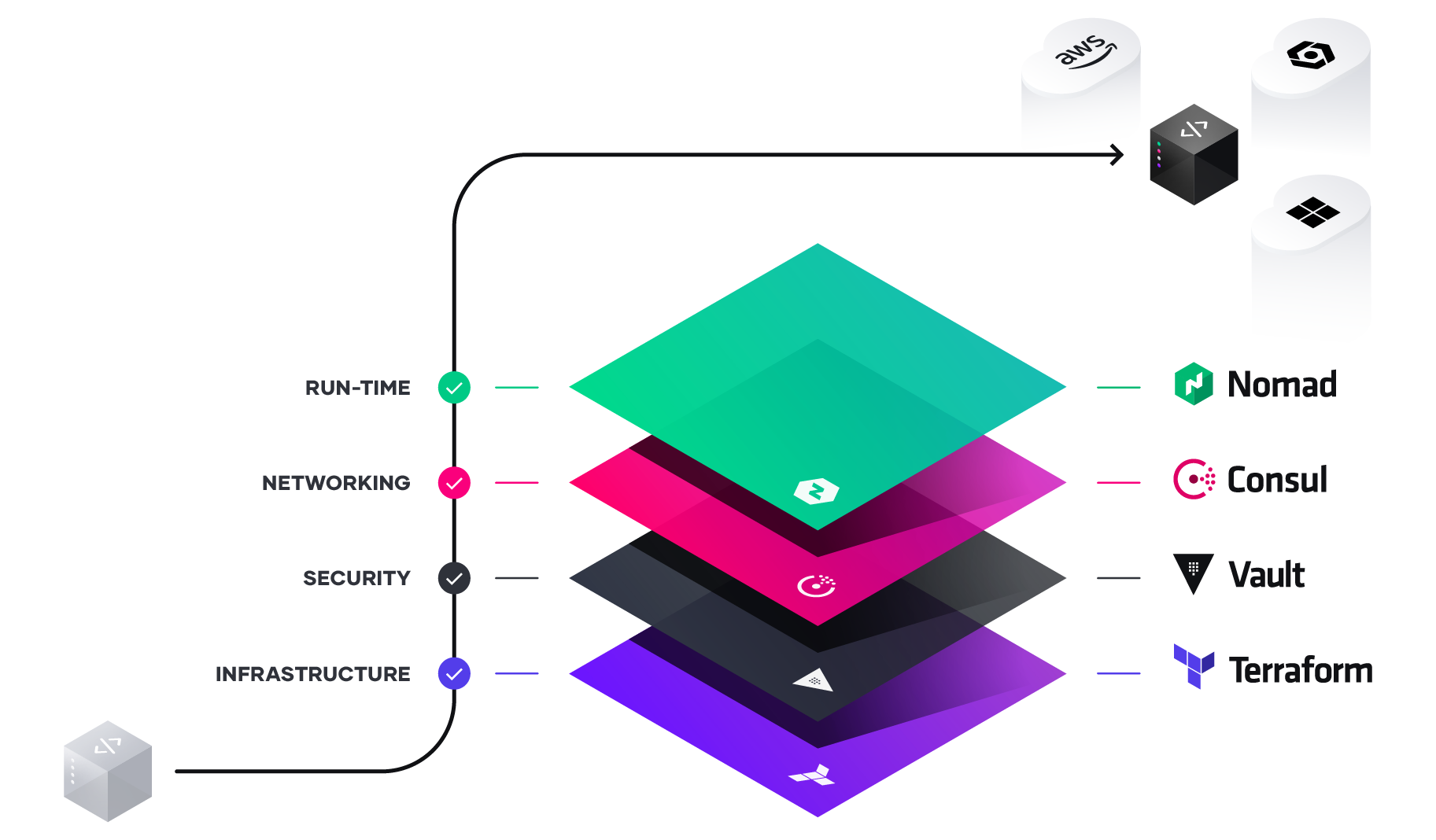

Vault is the security layer on top of Terraform and allows secrets for Kubernetes and other platforms to be stored in a secure manner. The bulk of downloads last year came from Vault being used in conjunction with Kubernetes. The discussion continued with a banking customer that used Vault to store API keys, certificates, and usernames/passwords. Vault also allows key rotation to be automated and X.509 certificates to be dynamically assigned and consumed.

There are two options for running Vault: the traditional download-and-run approach and SaaS in the HashiCorp Cloud Platform. Vault on AWS as a service was a new announcement.

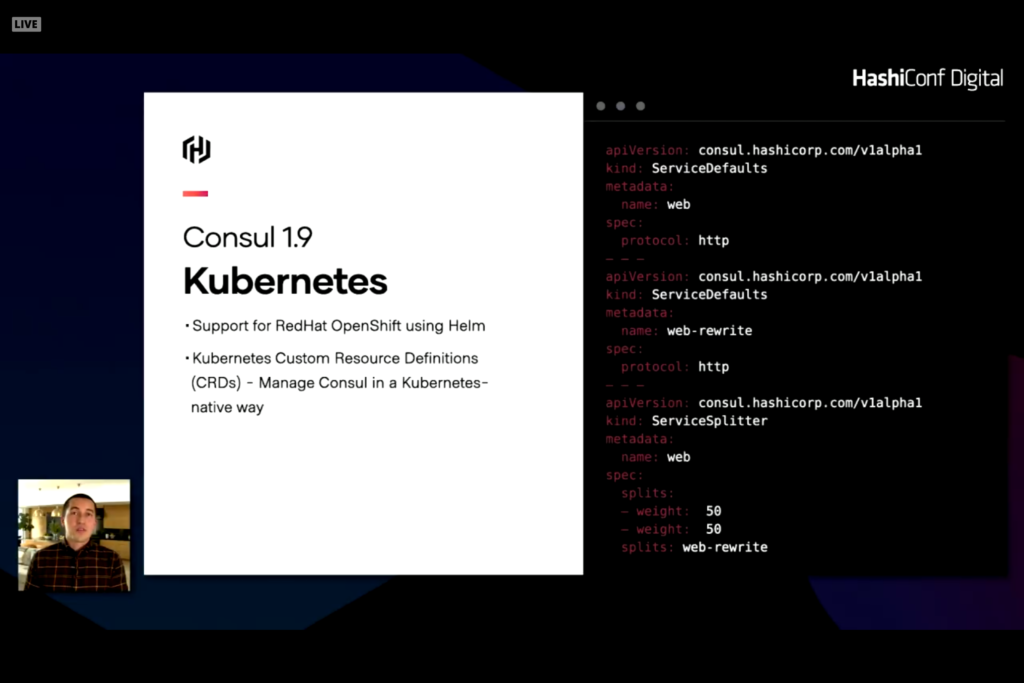

Consul is an extension of Vault allowing for network infrastructure automation that includes service discovery as well as access rights, authorization, and connection health. Consul can reconfigure on-premises network equipment like Cisco as well as cloud network configurations like load balancers, network security rules, and firewalls. New announcements were Consul on AWS as a service and Consul 1.9 with significant enhancements for Kubernetes.

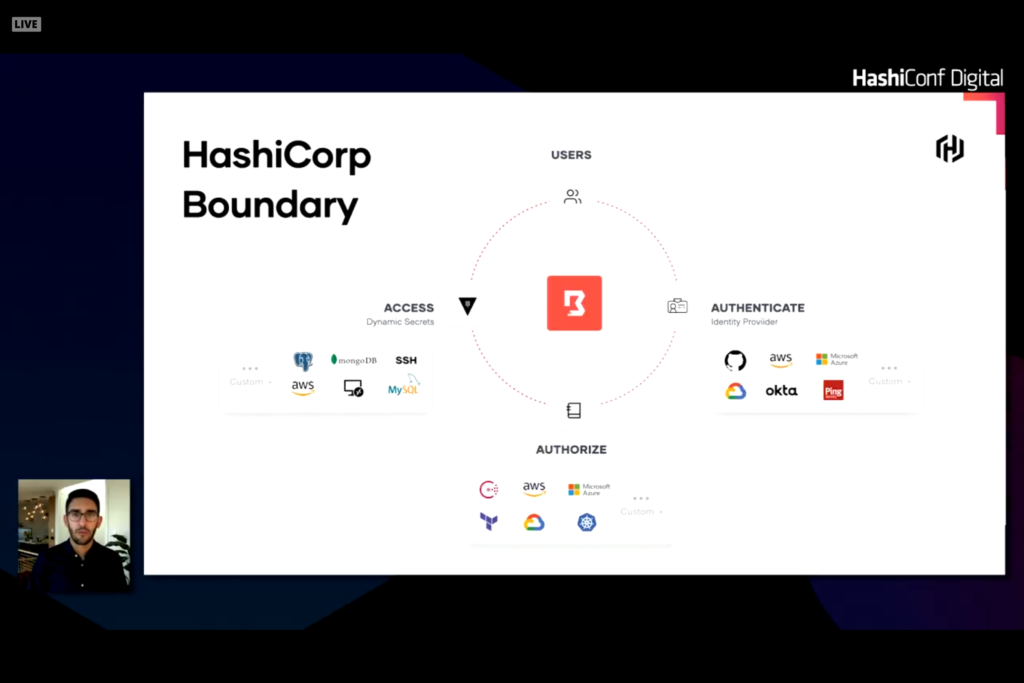

Human authentication and authorization is another layer that can cause problems with system configuration and automation. Traditional products like Active Directory or LDAP for on-premises, or Okta or AzureAD for cloud credentials, can be leveraged to provide auth and authz resources. The trick is how to carry these trusted sources through to servers and services. Traditionally this was done with SSH keys or VPN credentials on a secure network with known IP addresses or hostnames. With dynamic services and hosts this connection becomes difficult. Leveraging services like Okta or AzureAD and role-based access for users or services is a better way of solving this problem. Credentials can be dynamically assigned to a role and rotated as needed. The back-end servers and services can check these credentials with the auth service to confirm that the user or role is authorized for access. HashiCorp Boundary provides the linkages to make this work.

Boundary connects a pluggable identity provider to an authentication source to verify user identities. A second set of pluggable components connects to an authorization source and integrates with HashiCorp Vault to access services with stored secrets, allowing the secrets to be dynamic and rotated.

Vault as a Security Platform and Future Direction

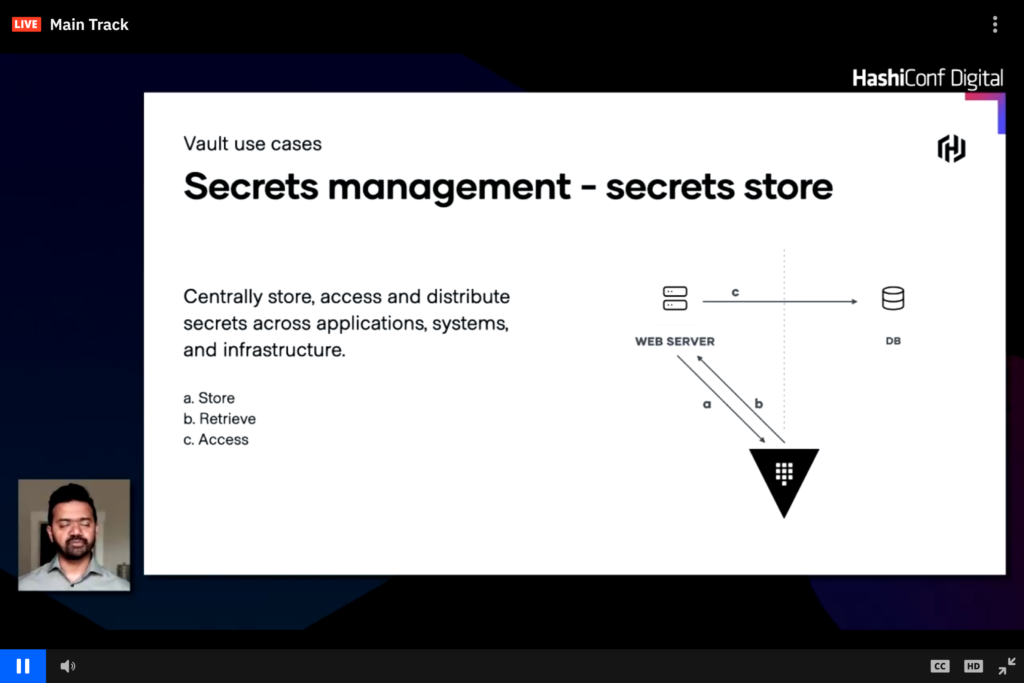

Vault centrally stores secrets for infrastructure

Vault can centrally store usernames and passwords, public and private keys, as well as other dynamic or secure credentials. In the image above a web server pulls the database credentials from Vault rather than storing them in code or config files, and then uses these credentials to connect to a database. This workflow can easily be changed so that the web server requests credentials from Vault, Vault connects to the database to generate a short-lived auth token, and the token is passed back to Vault and then on to the web server.
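To make the short-lived credential idea concrete, here is a toy in-memory model of the flow described above. It is only a sketch: `ToySecretsBroker`, `Lease`, and the role name are hypothetical names invented for illustration, and none of this is the Vault API. A real broker would create and revoke the account in the database itself.

```python
import secrets
import time
from dataclasses import dataclass


@dataclass
class Lease:
    username: str
    password: str
    expires_at: float  # clock value after which the credential is rejected


class ToySecretsBroker:
    """In-memory stand-in for a secrets broker (hypothetical, NOT the Vault API)."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl_seconds = ttl_seconds
        self.clock = clock  # injectable so expiry can be exercised without sleeping
        self._leases = {}

    def issue(self, role):
        # Generate a unique, random credential for the requesting role.
        lease = Lease(
            username=f"{role}-{secrets.token_hex(4)}",
            password=secrets.token_urlsafe(16),
            expires_at=self.clock() + self.ttl_seconds,
        )
        self._leases[lease.username] = lease
        return lease

    def is_valid(self, username, password):
        # Reject unknown users and expired leases before comparing passwords.
        lease = self._leases.get(username)
        if lease is None or self.clock() >= lease.expires_at:
            return False
        return secrets.compare_digest(lease.password, password)
```

The point of the design is that the web server never holds a long-lived password: it asks for a credential when it needs one, and the credential stops working once the TTL passes.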

Building a Self-service vending machine to streamline multi-AWS account strategy

The presentation was from Eventbrite describing how they use Terraform and the HashiStack to manage a multi-account AWS environment. Multiple AWS accounts are needed to isolate different domains and solutions. Security can be controlled across all accounts through automation. The AWS Terraform Landing Zone (TLZ) quickly became a solution. This product was introduced a year ago as a joint project between HashiCorp and Amazon.

The majority of the conversation was business justification for a multi-AWS account management requirement and how AWS Control Tower would not work. From the discussion and chat it appears that TLZ is still in beta and could potentially make things easier.

Terraform in Regulated Financial Services

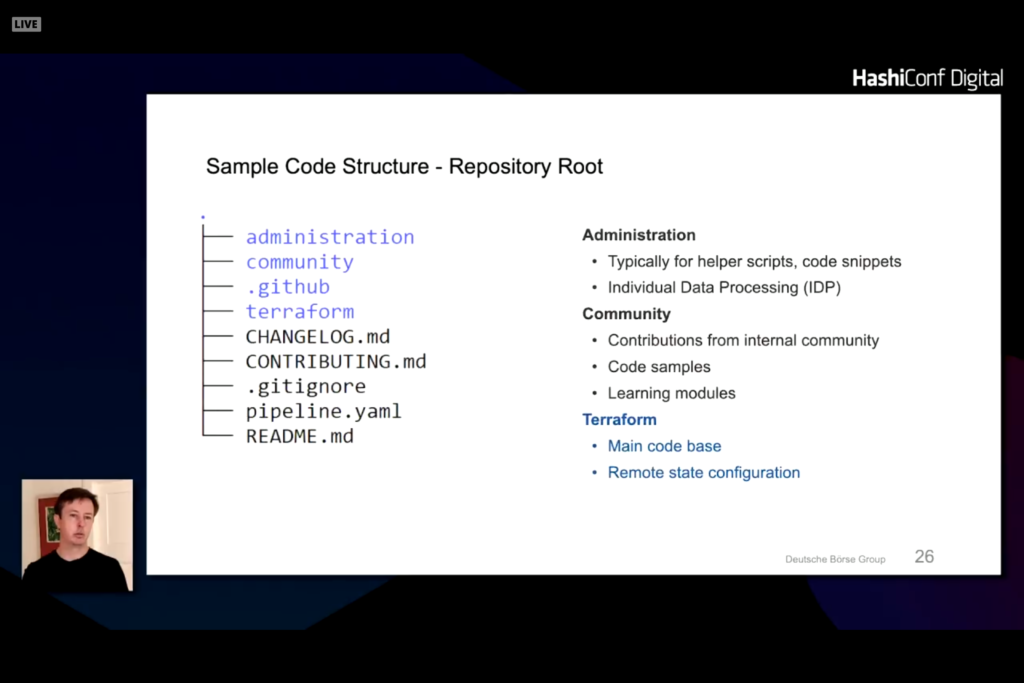

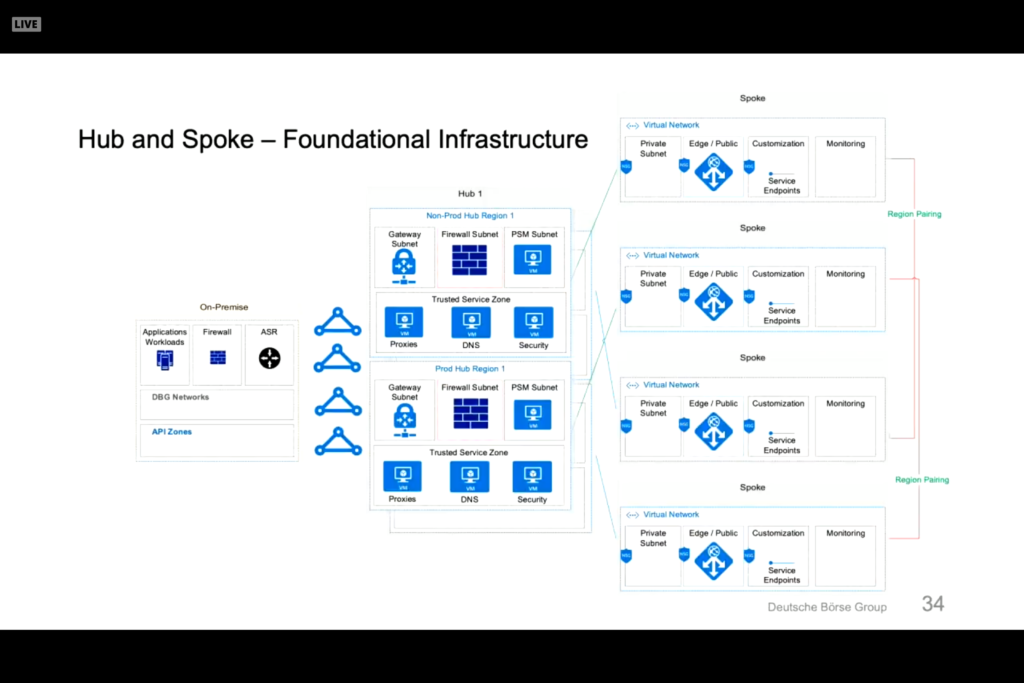

This was a customer presentation from Deutsche Boerse Group discussing Terraform deployment into AWS, Azure, and GCP for a fully automated electronic trading application. Terraform and Packer are the foundation for building and managing systems. Infrastructure as Code (IaC) helps with regulatory reporting and guidelines in the financial industry. Terraform helps define uniform policies and procedures. Code is designed and split into product zones that represent different applications or functions.

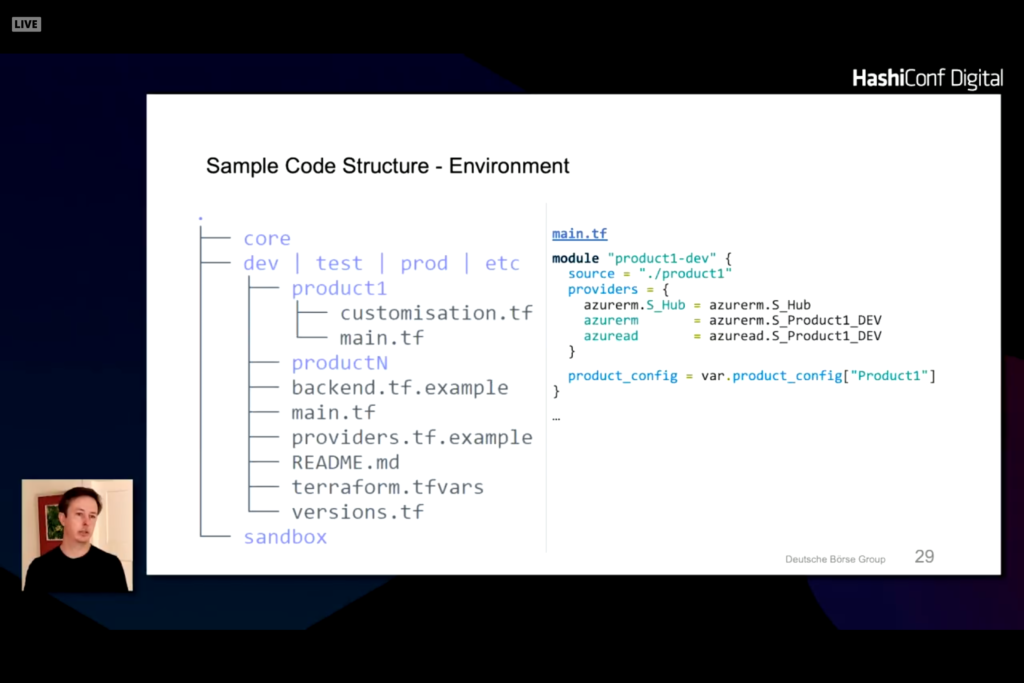

Under the terraform directory is a split into dev, test, prod, and other environment directories, with product lists under each one.

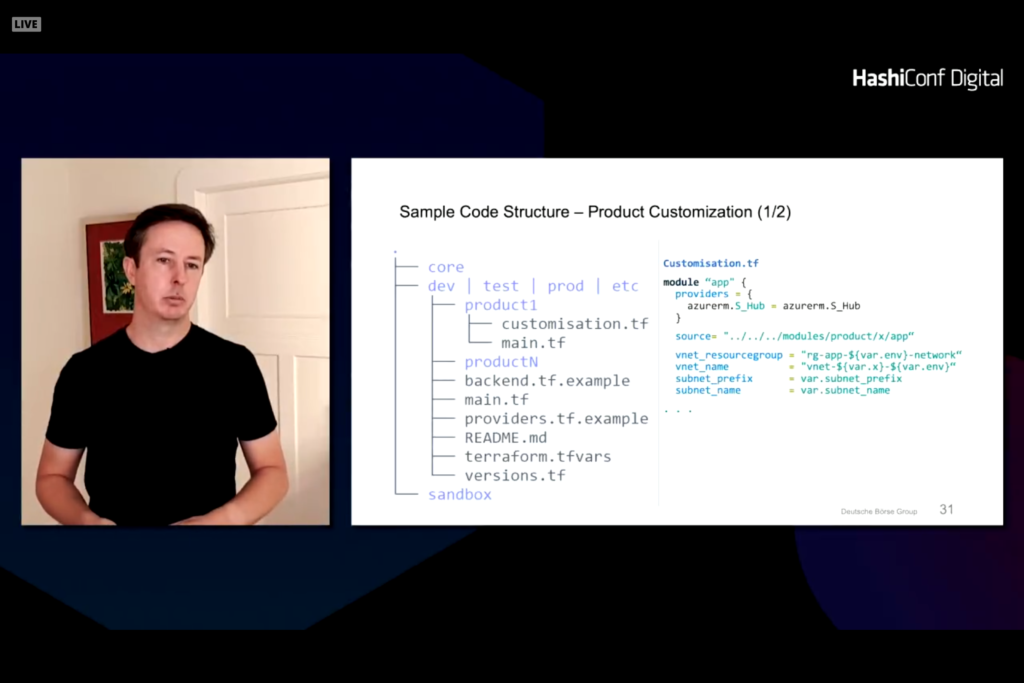

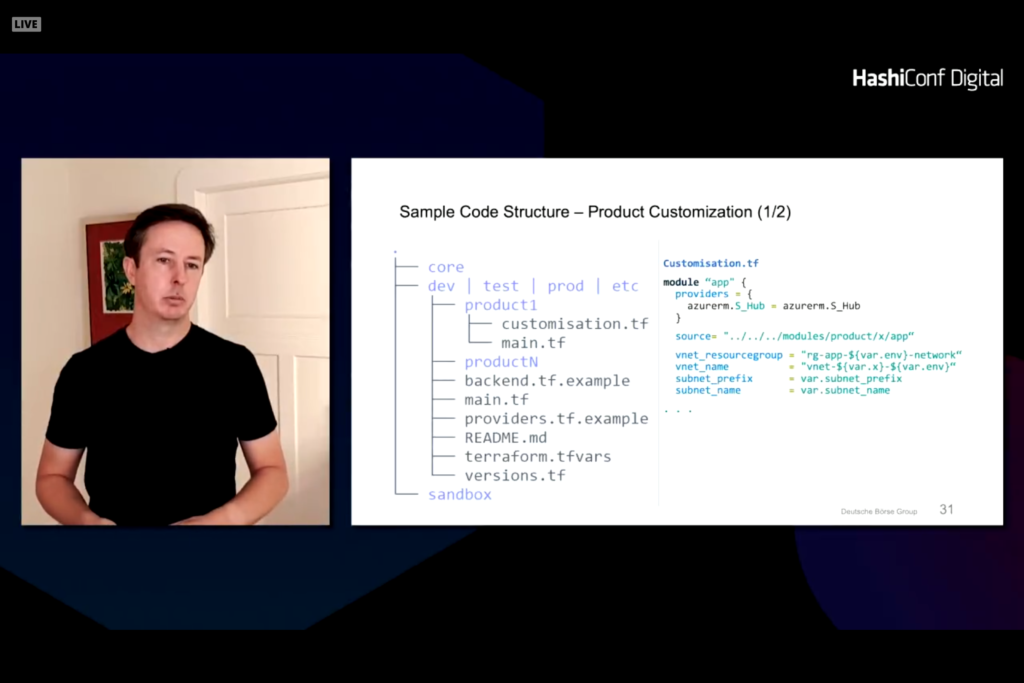

Note that a few structures are common across all modules, while others are specific to a product and class of service. Network controls are managed through a central network definition. Customizations can be made to record where a deployment varies from the company policies and procedures.

A standard module for a hub can be defined for services like monitoring and network.

This results in a core module that is secure and compliant with environments.

Packer is layered on top of this to harden the operating system and provision customizations into each virtual machine. Ansible configures the machine, and the result can be deployed straight to a cloud provider through a private marketplace or personal template.

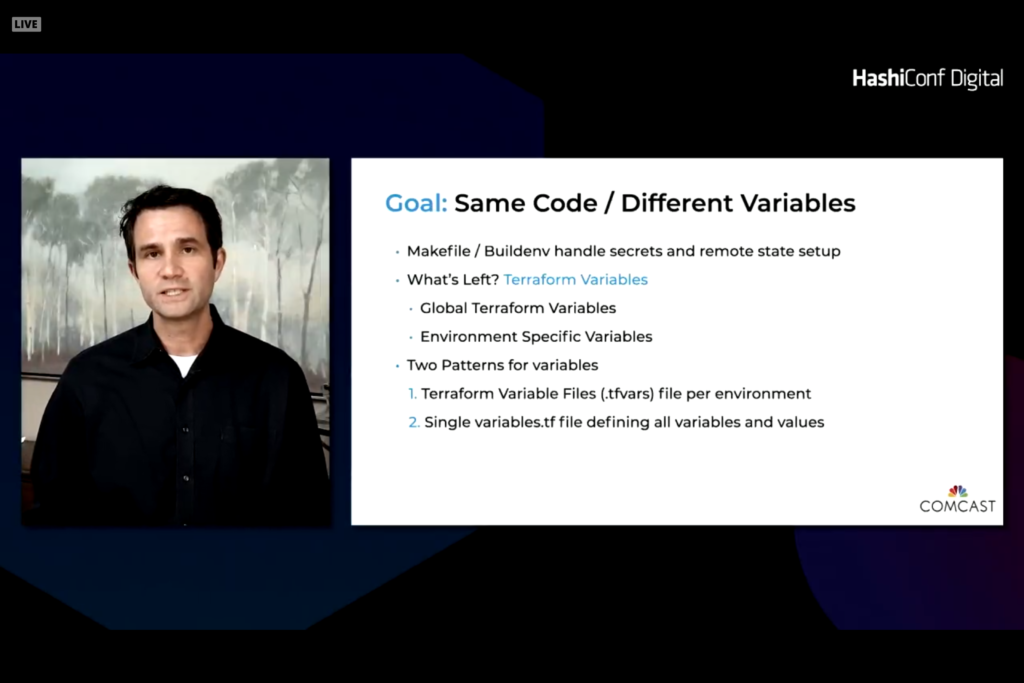

Terraform Consistent Development and Deployment

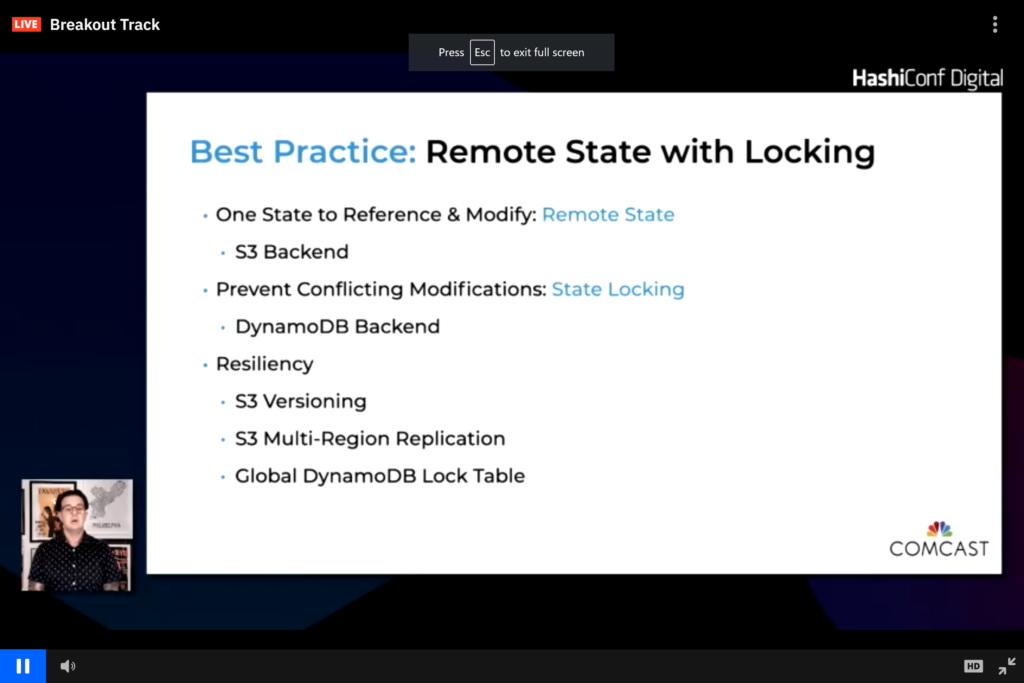

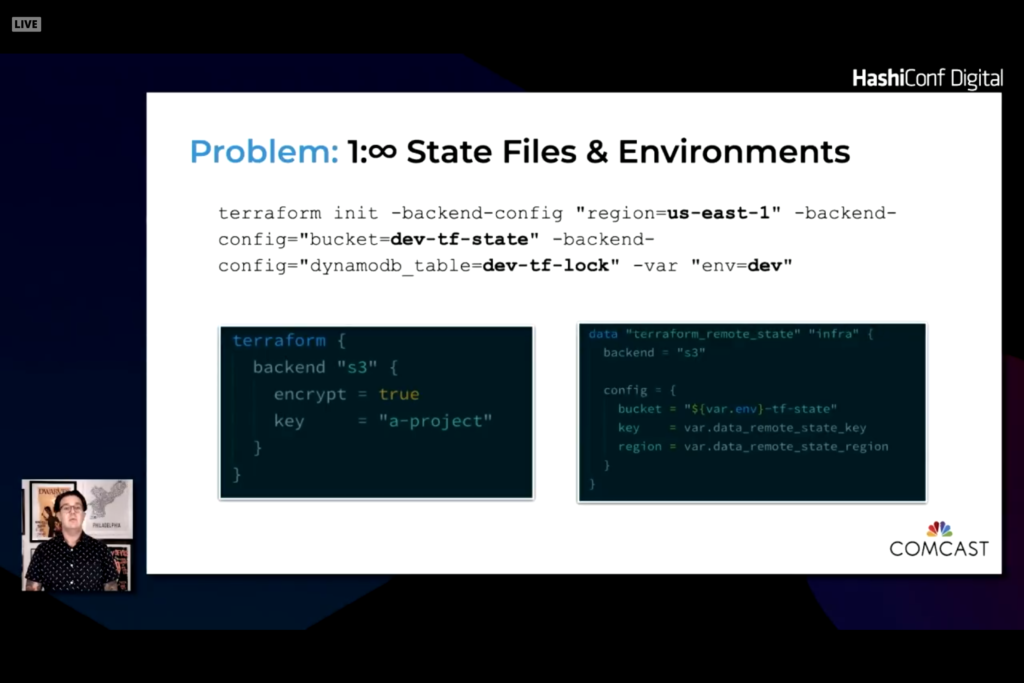

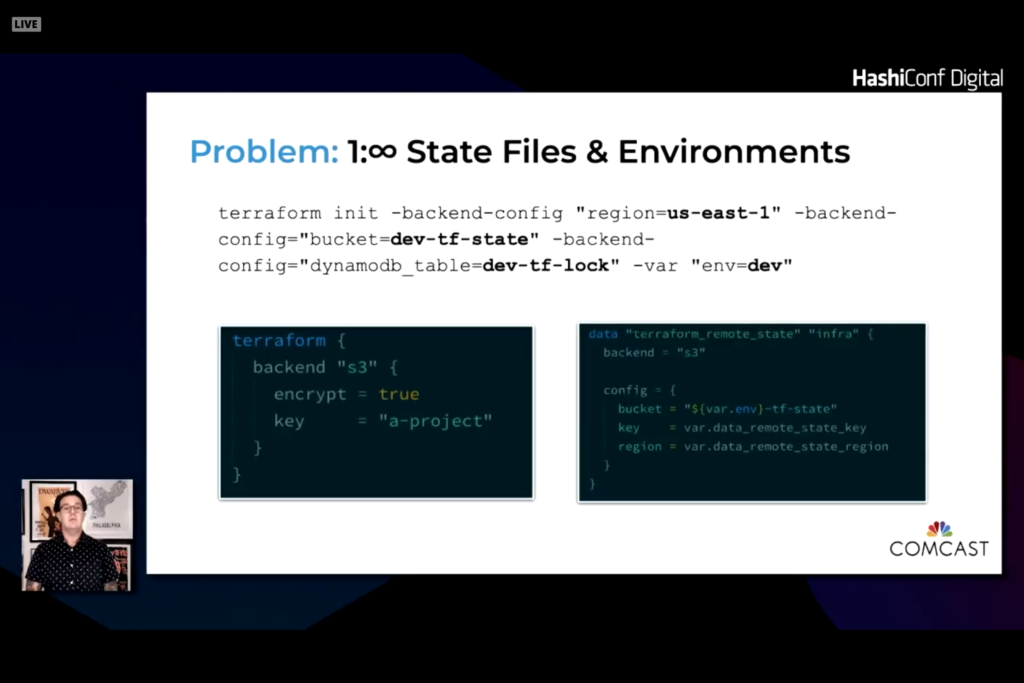

This presentation reviewed what Comcast has done with Terraform. The primary goals are consistency and accuracy. Having everyone run the same configuration and secrets helps reduce complexity. A secondary goal is to keep dev, test, and prod configurations the same across different regions and locations.

Bootstrap is done from a Git repository, then managed with a cloud storage backend.

State is stored and referenced from a common backend.

Use a Makefile with targets to run the proper terraform command with the proper environment variables. This allows you to integrate state, Vault, and secrets on all desktops and in the CI/CD tool.

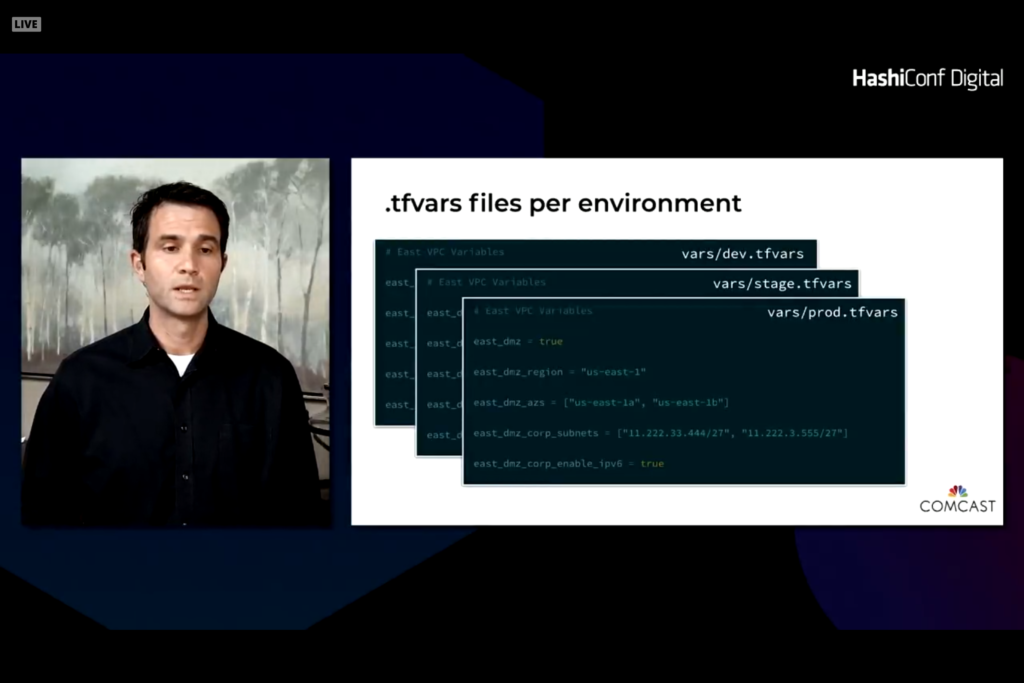

There are two levels of variables. The first level is specific to a platform. The second level is global. It is easy to set defaults and override them when needed. The difficulty is comparing two environments to see changes and differences.
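As a rough illustration of the default-and-override idea, and of how two environments could be compared, here is a small Python sketch. The function names and the example variables (`region`, `instance_count`, `alerts`) are hypothetical and were not shown in the presentation.

```python
UNSET = "<unset>"  # marker for a key that one side does not define


def merge_vars(global_vars, platform_vars):
    """Platform-specific values override global defaults."""
    return {**global_vars, **platform_vars}


def diff_vars(left, right):
    """Return {key: (left_value, right_value)} for every key whose values differ."""
    diffs = {}
    for key in sorted(left.keys() | right.keys()):
        left_value = left.get(key, UNSET)
        right_value = right.get(key, UNSET)
        if left_value != right_value:
            diffs[key] = (left_value, right_value)
    return diffs


# Hypothetical example values
global_defaults = {"region": "us-east-1", "instance_count": 1}
dev = merge_vars(global_defaults, {})
prod = merge_vars(global_defaults, {"instance_count": 3, "alerts": True})
differences = diff_vars(dev, prod)
```

Diffing the merged result rather than the raw files shows what each environment actually receives after defaults are applied, which is the comparison that is hard to do by eye.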

With this module you end up with a vars folder and a tfvars file unique to each environment. The Makefile pulls in the right values and ingests the desired tfvars file.
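The presentation used a Makefile for this. The same selection logic can be sketched in Python to show what such a wrapper does. The `vars/<environment>.tfvars` layout, the function names, and the `TF_VAR_environment` variable are assumptions for illustration; only the `-var-file` flag and the `TF_VAR_` prefix come from Terraform itself.

```python
import os
from pathlib import Path

# Terraform subcommands that accept -var-file
ALLOWED_ACTIONS = {"plan", "apply", "destroy"}


def terraform_command(action, environment, vars_dir="vars"):
    """Build the terraform argument list for one environment."""
    if action not in ALLOWED_ACTIONS:
        raise ValueError(f"unsupported action: {action}")
    # Assumed layout: one tfvars file per environment inside vars_dir
    var_file = Path(vars_dir) / f"{environment}.tfvars"
    return ["terraform", action, f"-var-file={var_file.as_posix()}"]


def terraform_env(environment, base_env=None):
    """Copy the base environment and expose the environment name to Terraform."""
    env = dict(os.environ if base_env is None else base_env)
    env["TF_VAR_environment"] = environment  # hypothetical input variable name
    return env
```

Passing the results to `subprocess.run(terraform_command("plan", "prod"), env=terraform_env("prod"), check=True)` would run the same command on a desktop or in the CI/CD tool, which is the consistency the Makefile targets are meant to provide.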

Remainder of presentations

The remainder of the presentations were Vault or Consul presentations. I primarily wanted to focus on Terraform deployments and presentations in this blog. More tomorrow given that day 2 is more Terraform focused.